How to make auto-generated videos feel human-like

Passing the Video Turing Test

Our customers have one big problem in common: their software changes constantly, and with every release their video libraries drift further out of date. So we built Videate to address this issue through automation.

Render a video once, and keeping your videos up to date with the latest release is as easy as clicking to re-render. One of our big challenges is making sure the end video product is the best it can be. We strive to produce videos that are so good, so human-like, that the viewer can't tell they were made through automation.

The road to "human-like" is interesting, because on that journey to "perfection", we actually have to embrace the imperfections.

Because those little imperfections are what make things feel human.

How the technology works

We strive to make video production as easy as writing or importing documents. In our customers' words, it's "magic" and "revolutionary."

To quote Arthur C. Clarke's third law:

"Any sufficiently advanced technology is indistinguishable from magic."

What may appear to be magic to our customers is actually new technology for building videos at a moment's notice. When we embarked on this mission, we ventured towards the seemingly impossible.

Now, it's a reality.

Let's take a quick look at the technology and how it works.

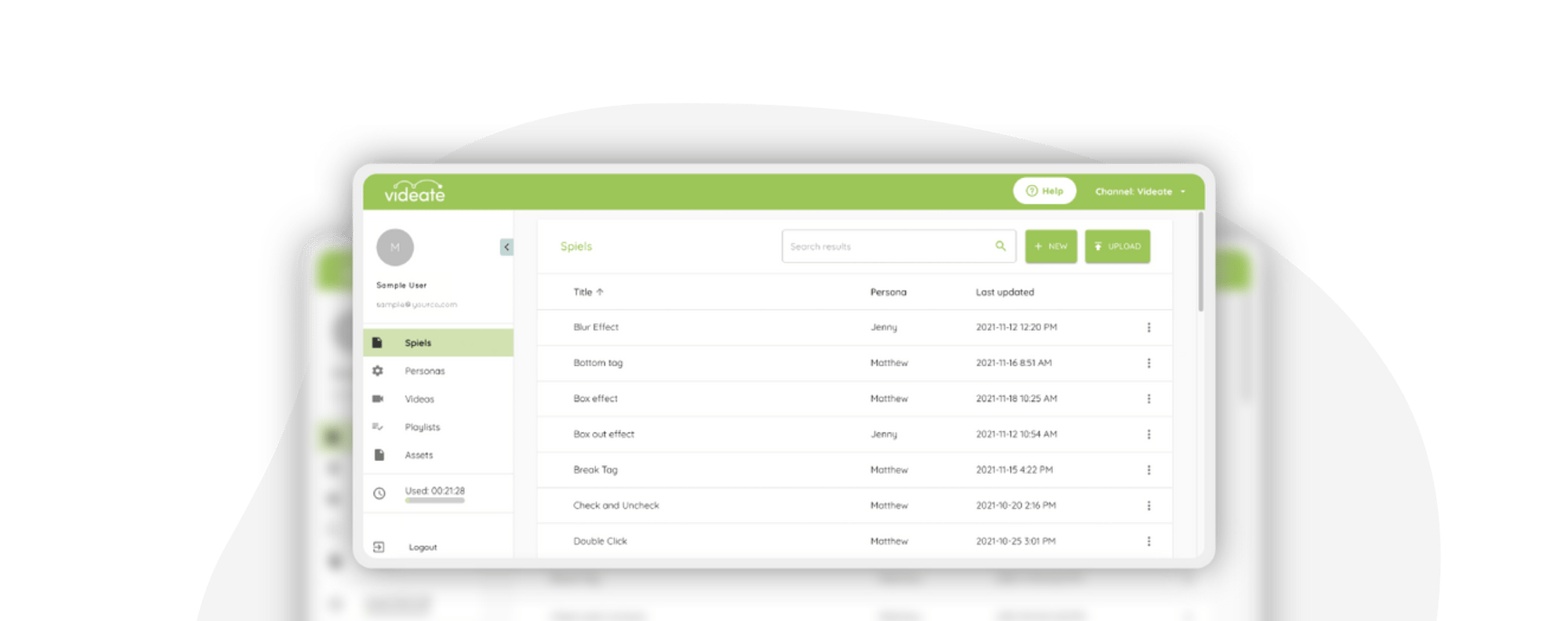

First, we ingest a document (it could be Google Docs, Word, XML, DITA, or another structured format). Then we convert the behaviors described in the document into our machine-readable format, Spiel XML.

Think of Spiel as an assembly language for our rendering engine. As the engine deciphers the Spiel, it's making decisions about what to do and how to do it in the most efficient and convincing way.

The first step in the automation process is to log in to the target software, usually a test or staging server. Videate reaches into your software and extracts the exact information needed to find what you want to do. You simply paste that into your document via annotations, and you are ready to Spiel.

If the document says, go to "Settings > API Integrations", our platform finds those elements on the browser page and performs the mouse movements required. If it says "enter your email and first name", it starts typing.

"Click save"... no problem.

The engine controls the flow and adds in special effects and transitions. To perform those annotations, it manipulates the underlying product. Traditional video editing programs require manually defining boxes to highlight or outline as an overlay in post-production. As the product evolves, it all must be redone.

Since we have control of the product while rendering the video, we can highlight HTML elements from within the page, providing an "inlaid" effect. Videate produces the video in one take. As a result, the post-recording editing phase people go through to clean up the video is unnecessary.

When the take is complete, Videate encodes the final video in several different formats. It can also stamp the video with closed-captions in one or more languages.

The Original Turing Test

In 1950, Alan Turing developed the "Turing Test" to assess a computer's intelligence. The test includes either a machine or a human hidden from an interrogator: The interrogator asks questions to determine whether they're talking to a machine or a human. If the machine's answers pass for human, it passes the test.

As video automation continues to improve, it's getting harder and harder to tell whether videos are made by humans or machines. If viewers can watch a video for five minutes and not notice it was auto-generated, we consider that passing the Video Turing Test.

Human-like video: perfectly imperfect

It's not easy to make a video produced by automation feel human-like. Our minds pick up on the slightest of imperfections. Yet the imperfection with auto-generated video is actually perfection! When it's too good, too smooth, something feels off. The chaos that humans generate when interacting with a computer is not something you notice ... until it's missing.

A human-recorded video could be poorly edited. It might include verbal stutters, stammers, and long pauses. There may be periods of dead time, jump cuts, or inconsistent audio. These nuances become tells, and their absence feels sterile.

Yet, professional voice talent, clean editing, and smoothly consistent on-screen actions make a video feel robotic. Even when produced by people.

While everything we do is a delicate balancing act that will enable us to pass the Video Turing Test, our goal for now isn't to make the absolute most human-like automated video. Instead, we strive to create a great experience that doesn't distract the viewer.

Wabi-sabi, or the art of asymmetry, is the name of the game. We've worked hard to include flaws in what is otherwise a very precise set of movements and actions. The right amount of human-like chaos makes the end product feel, well... right.

When it's perfectly imperfect, the viewer forgets the video was made by automation and not a human. When we achieve that, we pass the Video Turing Test.

There are two main categories that make up our version of the Turing Test: voice and human behaviors.

Video Voice

We use text-to-speech generators to make the video voiceovers. Videate uses voices from several different providers, including Microsoft, Amazon Polly, and IBM Watson. Providers have gotten incredibly good at making human-like text-to-speech voices. It's already difficult to tell the difference between a synthetic voice and a human one.

One component to keep in mind when picking the right voice is timbre. Timbre is the quality of sound. The other component is the shape of the sentences: inflecting in the right way, emphasizing the right words, pausing enough (like where a person would take a breath), and handling punctuation properly.

Providers use AI to figure this out. Synthetic speech handles short sentences just fine.

But the longer a sentence runs on, the harder it gets to make it sound just right. That's usually where the listener starts to notice the voice isn't human.

This is why we recommend customers adjust their video scripts to align more with how people talk versus how we write documentation. Shorter, more direct sentences translate better in the synthetic voiceover.
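As a rough illustration of that advice, a script can be checked for sentences that are likely to be too long for a synthetic voice. This is only a sketch: the function name `long_sentences`, the 20-word threshold, and the simple punctuation-based splitting are all assumptions for the example, not part of Videate.

```python
import re


def long_sentences(script: str, max_words: int = 20) -> list[str]:
    """Return the sentences in a script that exceed max_words words.

    The 20-word default is an arbitrary, illustrative threshold.
    """
    # Rough heuristic: split after ., ! or ? when followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", script.strip())
    return [s for s in sentences if len(s.split()) > max_words]


script = (
    "Click Save. To configure the integration, open the settings page, "
    "select the API tab, scroll down to the bottom of the list, and then "
    "click the button labeled Add New Integration to begin."
)
flagged = long_sentences(script)
```

Here `flagged` holds only the second sentence, which is a good candidate for splitting into two or three shorter ones before it goes to the voice engine.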

Harnessing the power of text-to-speech generation

We listen and talk to devices all day long: Alexa, Siri, Google, and even radio advertising. We're used to hearing these generated voices in our daily lives. Text-to-speech engines were fairly good when we originally launched Videate, and in just a few years they've gotten really good.

Providers like Amazon Polly and Microsoft Cognitive Services already generate high-quality, custom voices for customers. The "Space Race" for voice is moving fast.

We expect synthetic speech to get better and better, making video voiceovers indistinguishable from a human's. And since Videate is speech engine agnostic, we can layer in audio from any source. Customers can pick and choose which synthetic voice sounds best for their brand.

Human behaviors

Another major aspect of the engine is the ability to mix in behaviors and speech at the same time. This is what people naturally do when recording videos. You will notice mouse movements, clicks, scrolls, and typing while the engine is speaking. Without these interwoven actions, the videos feel slow and contrived. With them... it's like magic. 🪄

In the early days of developing our engine, we would do something, then say something (rinse and repeat). However, it didn't feel right. We needed the engine to speak, move the mouse, and apply special effects all at the same time. The timing has to be perfect. We invested a lot of time into launching multiple events on parallel paths of execution while the engine is speaking.
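To make the difference between "do, then say" and "do while saying" concrete, here is a minimal sketch using Python's `asyncio`. It is not how the Videate engine is built; `speak`, `move_mouse`, and the durations are hypothetical stand-ins, with `asyncio.sleep` simulating the time each activity takes.

```python
import asyncio


async def speak(text: str, seconds: float, log: list[str]) -> None:
    """Hypothetical stand-in for playing a voiceover line."""
    log.append(f"speak start: {text}")
    await asyncio.sleep(seconds)  # simulated audio duration
    log.append("speak end")


async def move_mouse(target: str, seconds: float, log: list[str]) -> None:
    """Hypothetical stand-in for animating the cursor to a target."""
    log.append(f"mouse start: {target}")
    await asyncio.sleep(seconds)  # simulated movement duration
    log.append("mouse end")


async def narrated_step(log: list[str]) -> None:
    # Run both activities concurrently instead of one after the other.
    await asyncio.gather(
        speak("Open Settings", 0.05, log),
        move_mouse("Settings", 0.02, log),
    )


def run_step() -> list[str]:
    log: list[str] = []
    asyncio.run(narrated_step(log))
    return log


events = run_step()
```

In `events`, the mouse movement starts and finishes while the narration is still playing, which is the overlap the paragraph above describes.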

We focus on simulating human behaviors in two areas: Mouse and Keyboard.

Mouse movement that feels organic

With mouse movements, a cursor that consistently goes too perfectly and too quickly from point A to point B feels robotic. The key to making mouse movements feel human-like is keeping them "slightly flawed."

You don't want that perfect swipe. You want it to maybe swoop a bit, or overshoot the button and come back, so you can almost see the human hand at work moving the device on a desk.

When the mouse navigates around the screen, we use a number of algorithms to simulate the movements of a trackball, a mouse, a touchpad, or a library of precise curves. It takes a lot of precise math to make mouse movement look natural! We observed tens of thousands of mouse and touchpad movements to get this right. That, and several hundred recordings of co-founder and CEO Dave Gullo himself moving his mouse around on a screen :)

Videate offers a synthetic mouse movement in addition to the more human-like one, but we've found that our customers primarily use the human-like, "slightly flawed" version.

We also included mouse-based callouts, like when you move a mouse back and forth or circle something on screen to emphasize an element or call attention to it.

Adding keyboard actions back in

As for the keyboard, we've been careful to include little things that make the experience seem like a human is typing. Examples include inserting micro-pauses between keystrokes, a brief silence where you can imagine somebody moving to hit the spacebar, or the sound of pressing "enter."

We've worked hard to synchronize all of this with what's going on onscreen. This is the kind of stuff you don't really think about until it's missing, and when it's missing, the experience feels robotic and jarring.

Additional "human-like" details

We have many different sound libraries available that play mouse and keyboard sounds (we see you, TikTok). When you watch a human-produced instructional video, you usually hear these things. When the viewer expects these sounds, even subconsciously, the lack of them becomes glaring.

Plus, reinforcing screen actions with sounds keeps the viewer's attention focused in the right place. Our customers can build a custom persona that provides voice characteristics, mouse and keyboard sounds, behavioral rates, custom colors, and effects that fit their brand or preference. We continue to add more special effects all the time that help drive the viewer's focus.

Closing the gap

With Videate's new innovations and a constant balancing act, we are closing the gap towards passing the Video Turing Test. Many clients already believe we pass, and that's why they've joined this revolution. Our present workflow enables customers to produce and update an entire video library on every software release, in minutes.

More importantly, these efforts help our customers win. Big time. The ability to produce videos at scale using automation is critical in a world that demands more and more video. But it's also important to keep viewers engaged, not jarred out of their experience by something feeling too robotic.